long term vs longtermism

how a term gets politically loaded and goes batshit crazy

We are all familiar with long-term thinking, right? Whether it is family schedules, budget planning, or large-scale government spending, one needs to look ahead to the future to survive, grow, and prosper. Of course, short-term thinking is easy. There are also trade-offs between short- and long-term thinking. We tend to put off certain types of decisions (either long or short term) when negative emotional states are involved. For governments and policymakers, long-term thinking often involves data, statistics, and projections.

So far, so good? In these uncertain times, we can agree that there is value in long-term thinking (even if it is not easy and can be error-prone). Some scholars argue that we are not that good at long-term thinking, and this is particularly true of our scroll-friendly, digital, cyborg lifestyle built on instant gratification. According to Richard Slaughter, in a 1996 article:

While all normal individuals have the capacity for foresight, and some institutions use it for specific purposes, nations on the whole have very little. Foresight declines markedly when we move from individuals to organizations, and again from these to societies. Why is this? There are many reasons. One of them is public scepticism: too many believe that you cannot know anything about the future. Another is that, as noted, short-term thinking is endemic. Most of those charged with political leadership are seldom actually leading in a way that is informed by the near-future context; rather, they are managing or administering on the basis of past experience. Another is avoidance, pure and simple. At some level people don’t want to know about tomorrow; today is quite hard enough. Not far from this is fatalism. One educated person said to me: ‘we know the planet is done for, so why should we bother?’

Slaughter and others are part of a field called “Future Studies,” whose origins can be traced back to great thinkers such as Ibn Khaldun (1332-1406). The idea is to look at historical patterns in order to think about foresight and sustainability.

Are you still with me? Good. This is where things get interesting. Now, to this idea of long-term thinking and sustainability, add a dash of post-pandemic apocalyptic madness, and maybe sprinkle in some libertarianism and billionaire philosophising. And don’t forget your regular dose of eugenics, and maybe stir in some QAnon-friendly conspiracy theories ripe for viral outbreak. Then top it all off with Artificial Intelligence and, voilà! We have “Longtermism.” At first, the term is not that bad. It is very similar to Future Studies: we need to think long term, and future people count. But then...

In the past few months, a lot has been written about this. I will highlight a few prominent points here with some links and tweets. The basic idea is this: we need to think really, really, really long term, we need to spend a lot of money on AI and digital-cyborg stuff, and we need to breed high-IQ people. All this recent frenzy probably started around 2011 with William MacAskill, who defines longtermism as “the view that positively influencing the longterm future is a key moral priority of our time”. Adding this moral dimension is where things get tricky and where longtermism diverges from Future Studies.

A lengthy piece by Émile P. Torres has a summary of the term and the frenzy behind it:

Consider the word "longtermism," which has a sort of feel-good connotation because it suggests long-term thinking, and long-term thinking is something many of us desperately want more of in the world today. However, longtermism the worldview goes way beyond long-term thinking: it's an ideology built on radical and highly dubious philosophical assumptions, and in fact it could be extremely dangerous if taken seriously by those in power.

So where does it get crazy? Is it wrong to take this moral and existential approach to future generations? Proponents of longtermism also differentiate between “weak” and “strong” longtermism. Worrying about climate change? OK, good. But that is not enough. Here’s another article, published in Vox, about this crazy idea:

Strong longtermism, as laid out by MacAskill and his Oxford colleague Hilary Greaves, says that impacts on the far future aren’t just one important feature of our actions — they’re the most important feature. And when they say far future, they really mean far. They argue we should be thinking about the consequences of our actions not just one or five or seven generations from now, but thousands or even millions of years ahead.

Their reasoning amounts to moral math. There are going to be far more people alive in the future than there are in the present or have been in the past. Of all the human beings who will ever be alive in the universe, the vast majority will live in the future.

If our species lasts for as long as Earth remains a habitable planet, we’re talking about at least 1 quadrillion people coming into existence, which would be 100,000 times the population of Earth today. Even if you think there’s only a 1 percent chance that our species lasts that long, the math still means that future people outnumber present people. And if humans settle in space one day and escape the death of our solar system, we could be looking at an even longer, more populous future.

Are you still with me? Maybe this is still OK? Here’s a quote from a 2019 paper by MacAskill and Greaves:

For the purposes of evaluating actions, we can in the first instance often simply ignore all the effects contained in the first 100 (or even 1000) years, focussing primarily on the further-future effects. Short-run effects act as little more than tie-breakers.

So that is why climate change and the current environmental crisis count as merely short-term concerns, or “weak longtermism.” Real men think in strong longtermism! And this is where the digital, cyborg stuff makes this whole thing batshit crazy. Instead of thinking about the climate, we need to work on AI and “digital people.” Here’s a quote from another excellent piece in Salon.com by Torres:

In practical terms, that means we must do whatever it takes to survive long enough to colonize space, convert planets into giant computer simulations and create unfathomable numbers of simulated beings. How many simulated beings could there be? According to Nick Bostrom — the Father of longtermism and director of the Future of Humanity Institute — there could be at least 10^58 digital people in the future, or a 1 followed by 58 zeros. Others have put forward similar estimates, although as Bostrom wrote in 2003, "what matters … is not the exact numbers but the fact that they are huge."

And what about eugenics, you ask? One of the existential risks listed by Nick Bostrom is “dysgenic pressure”:

It is possible that advanced civilized society is dependent on there being a sufficiently large fraction of intellectually talented individuals. Currently it seems that there is a negative correlation in some places between intellectual achievement and fertility. If such selection were to operate over a long period of time, we might evolve into a less brainy but more fertile species, homo philoprogenitus (“lover of many offspring”).

Some of these ideas derive from “transhumanism,” a term popularized by Julian Huxley, who was president of the British Eugenics Society from 1959 to 1962.

If your head hurts, you are not alone. But be cautious and worried. Again from Torres:

[...] longtermism is arguably the most influential ideology that few members of the general public have ever heard about. Longtermists have directly influenced reports from the secretary-general of the United Nations; a longtermist is currently running the RAND Corporation; they have the ears of billionaires like Musk; and the so-called Effective Altruism community, which gave rise to the longtermist ideology, has a mind-boggling $46.1 billion in committed funding. Longtermism is everywhere behind the scenes — it has a huge following in the tech sector — and champions of this view are increasingly pulling the strings of both major world governments and the business elite.


If you want to follow along and learn more, check out these tweets:

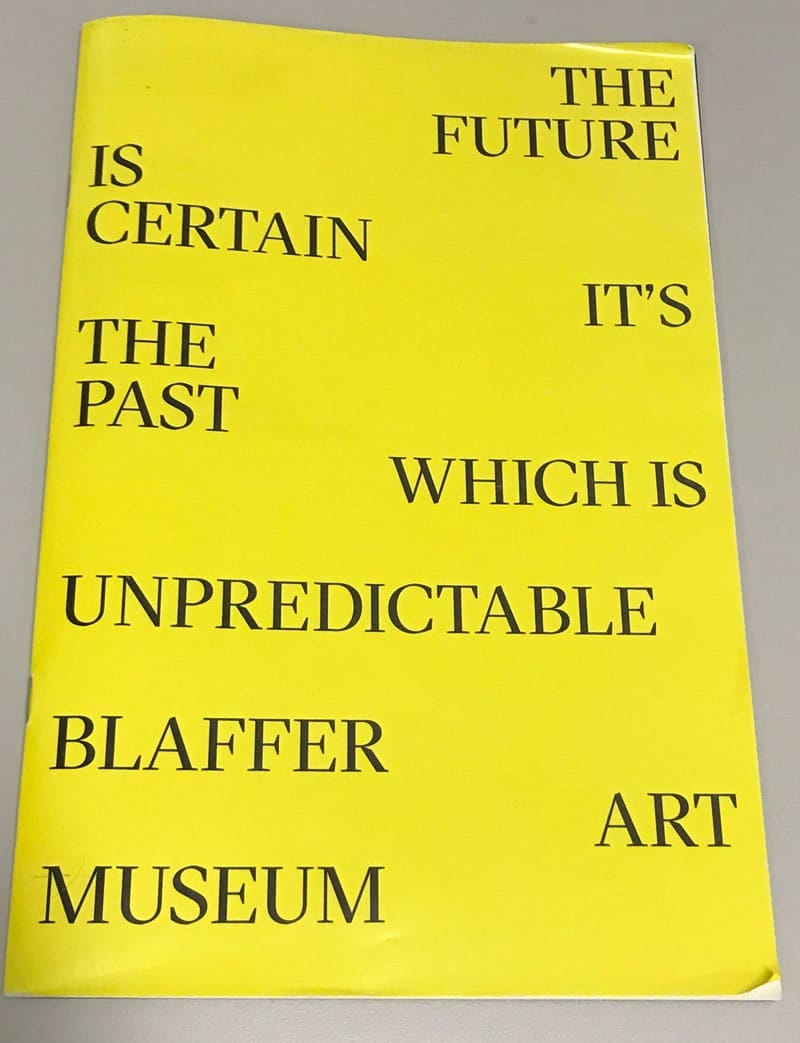

I will end with an old Soviet joke, just to make the mood a bit lighter. Maybe we should just go back to the past.

Good luck and good night!

Great introduction to the topic/issue - who knew! Conventional wisdom would have suggested (I think) that we have to leave future decisions to ... future generations, but without screwing things up for them first. But it does seem clear to me that Eugenics is not going away (the pressure to breed an "intelligent" species seems like it will only increase) and, well, I know I can't think that far ahead.